We’ve been developing FileMaker plug-ins since the release of FileMaker Pro 4, so some of our products have been there for our users for over a decade. We would like to share with you a few notes on how to make a product successful.

(This post is written about developing FileMaker plug-ins, but it is applicable to all software development in general.)

No matter how precisely you plan the future of your product, there will always be things you can’t predict, especially in software development. A lot has changed since we released our first plug-ins. Operating systems have changed, hardware has changed, the FileMaker platform has changed and even our users’ requirements have changed. We have changed too, and so have our products.

We grew and our products became better. I say only “better”, because an ideal version of our product would contain all the features our clients ask for, would contain no bugs and would be compatible with all current and future environments and systems. But that is hard to achieve and often not economically viable. So we are pushing our products closer to this ideal with this motto: “Every new version of a product has to be better than the previous one.” That is much more realistic and measurable.

There are two key processes to achieving this:

- Keeping track of all the issues/tasks.

- Testing all the current and previous versions’ issues before the product is released.

We use Redmine to keep track of all the bugs, issues and feature requests. It doesn’t matter whether they are reported by us (we do use our plug-ins, too), through our helpdesk system or through other channels. They are all recorded in one system, categorized, assigned a status and either included in some version or held for the future.

Whenever a new plug-in version is put together from this list, containing new features, bug fixes or both, there has to be comprehensive testing to ensure that those changes don’t break anything else. That can’t be accomplished by random testing. It has to be systematic, it has to be written down somewhere and it has to be easy to repeat with the current and every future version of the product. So our “acceptance tests list” was born.

It is a combination of positive tests, negative tests, boundary tests, UI tests, integration tests and so on. This list evolves with the product as features are added or removed and as new issues are found and fixed. Nevertheless, having one such list for each product is sufficient to determine which version of a product is better: the one that passes more of the tests.
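
To make “better than the previous one” concrete, here is a minimal Python sketch of how two versions could be compared against the same list. It is only an illustration, not our actual FileMaker solution: the `compare` function, the test names and the rule that “better” means no regressions plus at least one newly passing test are all assumptions.

```python
def compare(previous, current):
    """Compare two result sets that map a test name to True (passed) or False.

    A test missing from a result set is treated as not passing (assumption).
    """
    # Tests that passed before but do not pass now.
    regressions = sorted(
        name for name, ok in previous.items() if ok and not current.get(name, False)
    )
    # Tests that pass now but did not pass (or did not exist) before.
    fixed = sorted(
        name for name, ok in current.items() if ok and not previous.get(name, False)
    )
    return {
        "regressions": regressions,
        "fixed": fixed,
        # Assumed rule: nothing got worse and at least one thing got better.
        "better": not regressions and len(fixed) > 0,
    }


# Hypothetical results for two versions of a plug-in.
v1 = {"move file": True, "copy file": False, "delete file": True}
v2 = {"move file": True, "copy file": True, "delete file": True, "rename file": True}

report = compare(v1, v2)
print(report)
```

A stricter rule is possible, for example requiring every test to pass, but comparing against the previous version matches the motto more directly.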

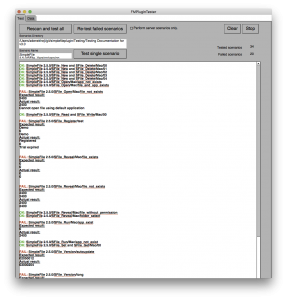

To make it easier for us, we have developed a FileMaker solution for our acceptance tests list. No paperwork, no complex requirements from the tester, just one database with all the tests. The process of testing then consists of these simple steps:

- click the “execute test” button

- compare the actual result with the expected result

- mark the test as done

- go to the next test

To keep the testing as simple as possible, a lot of tests can be written in such a way that the tester just compares the resulting output with an “ok” string. However, a lot of plug-in functions can’t be tested that easily. For example, in our SimpleDialog plug-in almost all of the tests are related to creating new dialogs and windows. When testing this, the tester has to be fully focused on what appears and sometimes even perform a few steps described in the test. The tester then has to evaluate whether it looked and behaved as written in the test.

So there is no general solution to make all the tests simple, but at least all the tests can be divided into two lists: those that require tester interaction and those that do not. The latter can be evaluated all at once with a single button click, and that is a real time saver. Then the only slowdown is creating the tests in the beginning. But there are ways to automate that, too.
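
The split into two lists can be sketched in a few lines of Python. This is a simplified illustration under our own assumptions, not the FileMaker solution itself: the `AcceptanceTest` class, the `run_automated` function and the three sample tests are hypothetical, and the string checks stand in for real plug-in calls.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class AcceptanceTest:
    name: str
    run: Callable[[], str]          # returns the actual output of the test
    expected: str = "ok"            # most automated tests just expect "ok"
    interactive: bool = False       # True if a human has to judge the result
    passed: Optional[bool] = None   # None means "not evaluated yet"


def run_automated(tests):
    """Evaluate every non-interactive test; return the ones left for a tester."""
    manual = []
    for test in tests:
        if test.interactive:
            manual.append(test)
            continue
        try:
            test.passed = test.run() == test.expected
        except Exception:
            # A crashing test counts as a failure instead of stopping the run.
            test.passed = False
    return manual


# Hypothetical tests; real ones would call plug-in functions.
tests = [
    AcceptanceTest("upper-cases text", lambda: "ok" if "abc".upper() == "ABC" else "fail"),
    AcceptanceTest("trims whitespace", lambda: "ok" if " a ".strip() == "a" else "fail"),
    AcceptanceTest("dialog shows two buttons", lambda: "", interactive=True),
]

manual = run_automated(tests)
automated = [t for t in tests if not t.interactive]
print(f"{sum(t.passed for t in automated)}/{len(automated)} automated tests passed")
print(f"{len(manual)} test(s) still need a tester")
```

The interactive tests are left untouched on purpose, so the tester can walk through them one by one afterwards.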

For example, in our SimpleFile plug-in we tried to think of all the combinations for our file handling functions (functions as simple as moving a file from one folder to another) and there were plenty. All those combinations of relative and absolute paths, file existence, folder existence, folder permissions, file permissions… and the number grew exponentially with each factor we tried to cover. So we ended up with a script that generates hundreds of tests for us. It prepares all the testing folders and files and generates all the tests for our testing solution. Then the only remaining challenge is to automatically generate the expected outputs, especially the error codes a function should return when a condition (or several of them at once) fails. After that, all those tests can be run with a single click and the test statistics will be available.
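
The idea of such a generator can be sketched in Python as follows. Everything specific here is an assumption made for illustration: the factor names, the error codes and the order in which failing conditions are checked are hypothetical and are not SimpleFile’s real behavior. The sketch also only builds the test descriptions; it does not create any folders or files.

```python
from itertools import product

# Hypothetical error codes, not the plug-in's real ones.
OK = 0
ERR_SOURCE_MISSING = 1
ERR_DEST_FOLDER_MISSING = 2
ERR_DEST_NOT_WRITABLE = 3

# Each factor multiplies the number of generated tests by its number of values.
FACTORS = {
    "path_style": ["relative", "absolute"],
    "source_exists": [True, False],
    "dest_folder_exists": [True, False],
    "dest_writable": [True, False],
}


def expected_code(case):
    """Derive the expected result; the first failing condition wins (assumed order)."""
    if not case["source_exists"]:
        return ERR_SOURCE_MISSING
    if not case["dest_folder_exists"]:
        return ERR_DEST_FOLDER_MISSING
    if not case["dest_writable"]:
        return ERR_DEST_NOT_WRITABLE
    return OK


def generate_cases():
    """Build one test description per combination of factor values."""
    names = list(FACTORS)
    cases = []
    for values in product(*FACTORS.values()):
        case = dict(zip(names, values))
        case["expected"] = expected_code(case)
        cases.append(case)
    return cases


cases = generate_cases()
print(len(cases))  # 2 * 2 * 2 * 2 = 16 combinations
```

With only four two-valued factors there are already 16 tests for a single function, and every additional factor doubles that again, which is why writing them by hand stops being practical quickly.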

We always have ideas as to how to move our development and testing processes one step further. And as we focus on developing better and better products, we have to apply our ever-improving motto to our testing process, too. Only that way can we both learn from the past and be prepared for future changes.

That’s our testing approach in a nutshell. And how do you test your products?